ACEPWG Level 1 intercomparison (radiances and degree of linear polarization)

One way to assess instruments is to intercompare their Level 1 products, which are the calibrated radiances, reflectances, degree of linear polarization, or other radiometric values that serve as inputs to geophysical parameter retrieval algorithms. If pixel-to-pixel comparisons between pairs of instruments agree within their joint uncertainties, we know that those instruments are operating as expected. This knowledge is also informative when assessing comparisons of retrieved (Level 2) products.

Here, we present the results of an ongoing Level 1 comparison between the AirMSPI and RSP instruments (PACS data are not yet available for comparison). The results of this comparison do NOT indicate that one instrument is 'right' and another is 'wrong', only that they jointly agree or disagree within expected uncertainty bounds. Disagreements can be resolved by the instrument teams reassessing their data generation procedures, calibration techniques, and uncertainty estimation models.

Our Level 1 intercomparison involves the following steps:

- Identification of appropriate comparison scenes and data. Three polarization sensitive spectral channels overlap for our AirMSPI and RSP comparison: 470nm, 660/670nm (AirMSPI/RSP), and 865nm. AirMSPI has five other non-polarization sensitive channels in the UV-VIS-NIR, while RSP has six other polarization sensitive channels in the VIS-NIR-SWIR.

- For each intercomparison point:

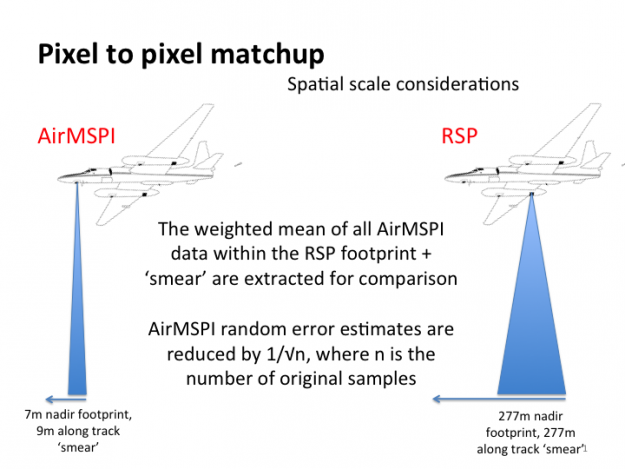

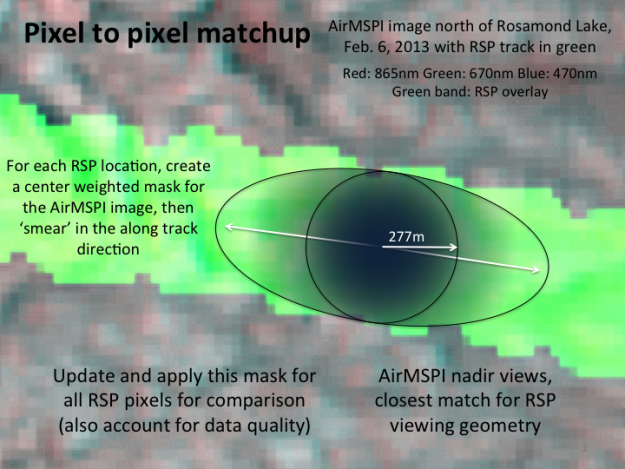

- Find the surface geographic coordinates of the comparison pixel for the instrument with the coarser spatial resolution (RSP).

- Identify all pixels for fine spatial resolution data (AirMSPI) within the coarse spatial resolution instrument's footprint.

- Calculate weighted averages of fine spatial resolution data within the coarse spatial resolution instrument's footprint. Weighting accounts for falloff at edges and along track 'smear'. Account for data quality and remove pixels with less than optimal quality.

- Identify mean viewing angle for the fine spatial resolution data, and find the closest viewing geometry among the coarse spatial resolution data.

- Compute expected uncertainties for both fine and coarse resolution datasets. Modify the random uncertainty of the fine spatial resolution data to account for averaging.

- Normalize biases by joint instrument uncertainty, and assess.
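The averaging and uncertainty steps above can be sketched roughly as follows. This is a minimal illustration, not the procedure actually used for the comparison: the function name, the argument names, the assumption that per-pixel random errors are independent, and the quadrature sum of random and systematic terms are all assumptions made for the example. The weights stand in for whatever footprint response (edge falloff, along-track smear) is applied.

```python
import numpy as np

def footprint_average(values, weights, quality_ok, random_unc, systematic_unc):
    """Weighted mean of fine-resolution pixels inside one coarse footprint.

    All names are hypothetical. `random_unc` is the per-pixel 1-sigma random
    uncertainty (scalar or array); `systematic_unc` is a scalar 1-sigma
    uncertainty shared by every pixel, so averaging does not reduce it.
    """
    values = np.asarray(values, dtype=float)
    weights = np.asarray(weights, dtype=float)

    # Drop pixels flagged as less than optimal quality, or with zero weight.
    keep = np.asarray(quality_ok, dtype=bool) & (weights > 0)
    if not keep.any():
        raise ValueError("no usable fine-resolution pixels in this footprint")

    v = values[keep]
    w = weights[keep] / weights[keep].sum()  # normalize weights to sum to 1
    s = np.broadcast_to(np.asarray(random_unc, dtype=float), values.shape)[keep]

    mean = float(np.sum(w * v))

    # Random uncertainty of a weighted mean, assuming independent pixel errors.
    rand_of_mean = float(np.sqrt(np.sum((w * s) ** 2)))

    # Assumed combination rule: random and systematic terms added in quadrature.
    total_unc = float(np.hypot(rand_of_mean, systematic_unc))
    return mean, total_unc
```

With equal weights and N good pixels, the random term shrinks by a factor of sqrt(N), while the systematic term is unchanged.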

Intercomparison scenes

Thus far, six PODEX scenes in California and the nearby coastal Pacific Ocean have been compared, creating a total of 431 matchup points. These scenes were selected for their individual spatial homogeneity (to minimize sensitivity to the spatial registration process) and for the overall range of reflectance and polarization values.

A spreadsheet describing the comparison scenes, including geolocation data and original filenames, is here.

Pixel to Pixel Matchup Technique

Matchup Results

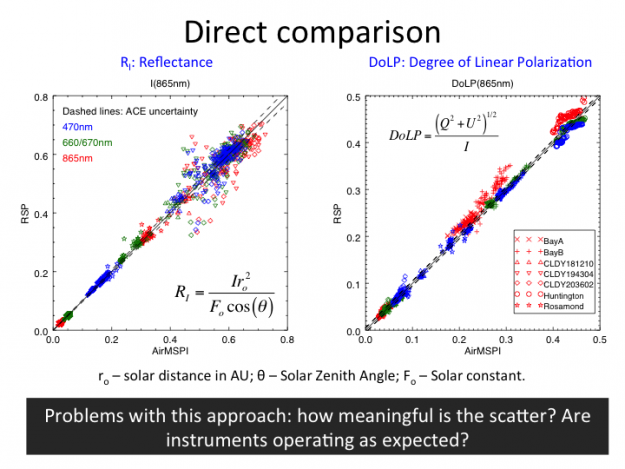

Level 1 matchups between RSP and spatially averaged AirMSPI data within the footprint are presented here. Scatterplots between the pairs of measurements (first figure) are a common way of presenting differences. As we can see, there is a high degree of correspondence between the data, as we would hope. But how meaningful are the differences? The lines shown for reference are the ACE instrument uncertainty requirements, but they do not tell us whether our instruments are operating as we expect. We therefore prefer to represent our matchups as in the second figure, where differences between pairs of measurements are normalized by the sum of the AirMSPI and RSP uncertainties ( Difference = (RSP-AirMSPI) / (UncertaintyRSP + UncertaintyAirMSPI) ). In this representation, a value of +1 means that the RSP data point is larger than the AirMSPI data point by an amount equal to the sum of their (1 sigma) uncertainties. If these normalized differences follow a standard Gaussian distribution, 95% would have an absolute value of 1.96 or less. We use this as a test: if fewer than 95% are within these bounds, the uncertainty estimates of one or both instruments underrepresent the uncertainty encountered in the scene.
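As a rough sketch of the normalization and the 95% test described above, assuming the matchups are held in NumPy arrays (the function and argument names are hypothetical, not taken from the analysis code):

```python
import numpy as np

def normalized_difference(rsp, airmspi, unc_rsp, unc_airmspi):
    """(RSP - AirMSPI) / (UncertaintyRSP + UncertaintyAirMSPI), per matchup."""
    rsp = np.asarray(rsp, dtype=float)
    airmspi = np.asarray(airmspi, dtype=float)
    joint = np.asarray(unc_rsp, dtype=float) + np.asarray(unc_airmspi, dtype=float)
    return (rsp - airmspi) / joint

def fraction_within(norm_diff, bound=1.96):
    """Fraction of matchups whose normalized difference lies within +/- bound."""
    norm_diff = np.asarray(norm_diff, dtype=float)
    return float(np.mean(np.abs(norm_diff) <= bound))

def passes_test(norm_diff, bound=1.96, required=0.95):
    """True if at least `required` of the matchups fall within +/- bound."""
    return fraction_within(norm_diff, bound) >= required
```

A channel would pass when `passes_test` returns True for its set of matchups. A failure only says that the combined uncertainty estimates are too small for the scene, not which instrument is responsible.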

The RSP-AirMSPI difference plots show that 95% or more matchups of scene reflectances agree within the +/- 1.96 range, meaning that the uncertainty models of both instruments collectively represent the total (unpolarized) reflectance property. This is not the case for the Degree of Linear Polarization (DoLP), which is the ratio of linearly polarized to total reflectance. The worst channels are 470nm and 865nm, where only 69% and 54%, respectively, agree within uncertainty expectations. This means that the models used to characterize instrument uncertainty for polarization underestimate the total uncertainty, although this analysis cannot pinpoint which instrument is the source (and it could be both).
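For reference, the DoLP ratio mentioned above is conventionally formed from the Stokes parameters I, Q, and U. The short sketch below assumes those three quantities are available per matchup. The symbols and the function name are not from the text above, and the instrument products may report DoLP directly.

```python
import numpy as np

def dolp(i, q, u):
    """Degree of linear polarization: sqrt(Q^2 + U^2) / I.

    `i` is the total reflectance (or radiance); `q` and `u` are the linearly
    polarized components in the same units. Names are illustrative only.
    """
    i = np.asarray(i, dtype=float)
    q = np.asarray(q, dtype=float)
    u = np.asarray(u, dtype=float)
    return np.sqrt(q ** 2 + u ** 2) / i
```

Because DoLP is a ratio, it is unaffected by any calibration error that scales I, Q, and U by the same factor, but it remains sensitive to errors that affect the polarized components differently from the total.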

Next Steps

Planned updates and improvements to this intercomparison include the following:

- Inclusion of PACS data when they become available. Uncertainty models for that instrument are also required.

- Addition of other PODEX scenes, particularly those under investigation by the ACE Polarimeter teams for Level 2 analysis.

- Addition of SEAC4RS and HyspIRI scenes (RSP and AirMSPI only).

- Comparison at viewing angles other than the near-nadir matchups used in this analysis.

NEW! (as of December, 2015)

Both the RSP and AirMSPI datasets have had several changes that improve their intercomparison. AirMSPI improved its calibration, while RSP improved its geolocation. Compared using the methodology described above, the instruments continue to agree within stated uncertainties for reflectances, and agree much better than before for DoLP. The DoLP values, however, still do not agree within stated uncertainties.

More details can be found here. However, this should not be considered the final assessment of the data. The AirMSPI geolocation for the data assessed here was performed by hand. This comparison will be repeated once automated geolocation is applied to AirMSPI data and the data are made publicly available.

Suggestions for additional improvements are welcome.